As manipulated media becomes cheaper, faster, and harder to detect, the burden of identifying fraud and protecting victims increasingly falls on individuals and organisations rather than on the platforms that host the content. In response to this escalating threat, Cyberette, a Dutch AI startup founded in 2024, is building software to detect, analyse, and explain manipulated digital content, including deepfakes, voice clones, and altered images, videos, and text, with a focus on fraud investigation and high-stakes environments.

A Personal Story Behind the Startup’s Creation

Cyberette was founded by CEO Julia Jakimenko, who previously worked in data security and compliance in banking. Her decision to create the company was shaped by a personal experience: a close friend’s face and body were used without consent on fake dating profiles to scam unsuspecting victims. The emotional toll on her friend, who even sent money to one of the victims out of guilt, made Jakimenko realise how widespread and damaging manipulated media has become, particularly for women. Studies show that more than 80 per cent of explicit deepfake images target women, and many of these images are used in sextortion and harassment cases.

Having seen the impact both professionally and personally, Jakimenko teamed up with a former colleague to build the first prototype. After exhibiting it at a major technology event and receiving strong interest from investigators and enterprises, the team expanded with early financial support from industry partners. Cyberette has since grown into a team of AI researchers, data scientists, and security experts that is developing deep technical capabilities and collaborating with leading universities.

Beyond Real or Fake: A New Approach to Detection

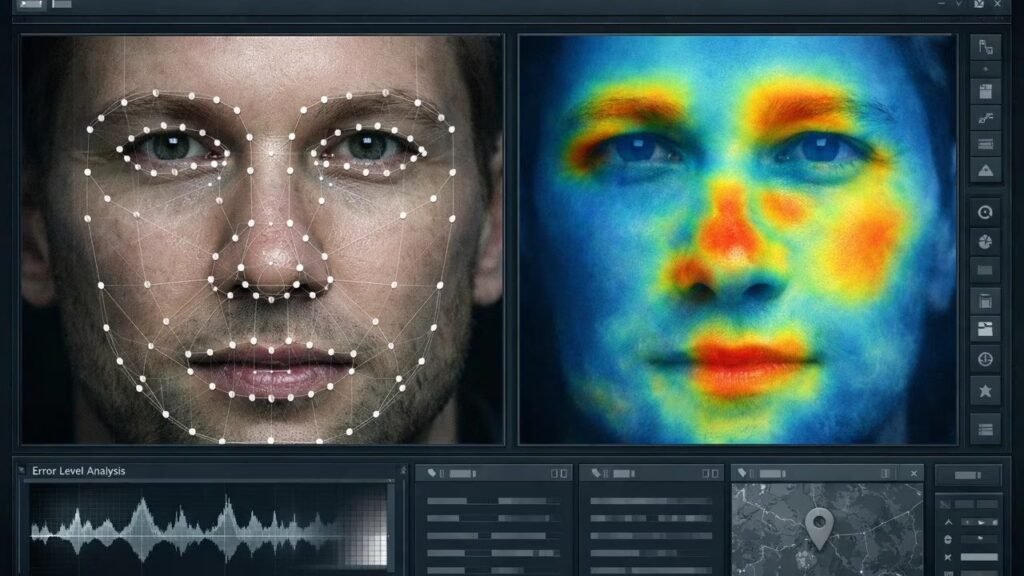

Cyberette aims to move far beyond binary detection tools. Instead of simply flagging content as real or fake, the platform analyses how content was altered, why it was altered, and the broader context around it. The system reports provenance information, manipulation patterns, the generative models likely used, and, when relevant, approximate timestamps or IP-level indicators. This deeper analysis turns detection into evidence, giving investigative teams structured, actionable insights.
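To make the contrast with a binary flag concrete, the sketch below shows what a structured result of this kind might look like. It is purely illustrative: the class names, field names, and the 0.5 threshold are assumptions made for this example and do not describe Cyberette's actual output format or API.

```python
import json
from dataclasses import dataclass, field, asdict
from typing import List, Optional


@dataclass
class ManipulationFinding:
    """One detected alteration. All field names here are hypothetical."""
    kind: str                              # e.g. "face_swap" or "voice_clone"
    confidence: float                      # detector confidence, 0.0 to 1.0
    suspected_model: Optional[str] = None  # likely generator, if one is identified


@dataclass
class EvidenceReport:
    """A structured result that carries more than a real/fake verdict."""
    file_name: str
    findings: List[ManipulationFinding] = field(default_factory=list)
    provenance: Optional[str] = None        # e.g. a content-credential summary
    approx_timestamp: Optional[str] = None  # approximate time of alteration

    @property
    def is_manipulated(self) -> bool:
        # Assumed rule: any finding at or above 0.5 counts as manipulation.
        return any(f.confidence >= 0.5 for f in self.findings)

    def to_json(self) -> str:
        # Serialise the report so it can be attached to a case file.
        payload = asdict(self)
        payload["is_manipulated"] = self.is_manipulated
        return json.dumps(payload, indent=2)


report = EvidenceReport(
    file_name="clip.mp4",
    findings=[ManipulationFinding(kind="face_swap", confidence=0.92)],
)
print(report.to_json())
```

The binary verdict is still available as `is_manipulated`, but it is derived from the findings rather than being the only thing an investigator receives.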

The company combines landmark-based facial analysis, heatmap anomaly scoring, sentiment and behavioural analysis, watermarking, metadata inspection, and media forensics to identify inconsistencies. Its detection engine works in real time, often returning results in under two seconds, which makes it suitable for live verification and security-sensitive workflows.
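The article does not say how these signals are combined. As one simple possibility, the sketch below fuses per-detector anomaly scores with a weighted average. The function name, the detector labels, and the weights are all assumptions chosen for illustration, not the company's method.

```python
from typing import Dict


def fuse_scores(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Return the weighted average of per-detector anomaly scores in [0, 1].

    Detectors that produced no score (for example, no face in the frame)
    are skipped, and the remaining weights are renormalised.
    """
    used = {name: w for name, w in weights.items() if name in scores}
    total = sum(used.values())
    if total == 0:
        raise ValueError("no weighted detector produced a score")
    return sum(scores[name] * w for name, w in used.items()) / total


# Hypothetical weights: facial landmarks are trusted more than metadata here.
weights = {"landmarks": 3.0, "heatmap": 2.0, "metadata": 1.0}

# Only two detectors reported for this file; "heatmap" is skipped.
scores = {"landmarks": 0.8, "metadata": 0.2}
print(round(fuse_scores(scores, weights), 2))
```

A weighted average is cheap to compute, which suits a tight latency budget, but a production system could just as well use a learned classifier over the same signals.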

Built for Real-Time, High-Stakes Workflows

Unlike generic detection tools, Cyberette’s platform is designed specifically for real-world investigative and monitoring environments. Its in-house AI models are optimised for low-latency, high-precision detection across complex datasets. These models run efficiently in the cloud or fully on-premise, allowing governments, enterprises, and defence agencies to process sensitive data without relying on external servers.

For investigations, Cyberette also offers intent analysis, highlighting inconsistencies between voice, visual signals, and contextual meaning. This helps reveal the motivation or goal behind the manipulation, which is often essential in cases involving fraud, threats, or misinformation.

Enterprise-Ready, Scalable, and Secure

Cyberette’s architecture supports millions of users and billions of files, running on both GPU and CPU infrastructure to keep operational costs manageable. It is engineered for global deployment and meets stringent compliance requirements, including GDPR, ISO standards, and protections for personally identifiable information (PII). The platform integrates content provenance authentication through established frameworks like C2PA, offering secure verification for governments, broadcasters, brands, and creative professionals.

Cyberette’s customers span defence threat-monitoring systems, public sector organisations, law enforcement agencies, and private-sector fraud units. For enterprises, the platform supports fraud prevention, identity verification, behavioural analysis, and content authenticity checks. It also integrates with major video conferencing tools for real-time participant verification and manipulation alerts.

Positioning for Global Growth and High-Impact Use Cases

Currently moving from pilot to full commercial rollout, Cyberette already has paying customers in sectors where manipulation poses real and urgent risk. Its work includes collaborations with forensic teams and government defence organisations, as well as investigations involving fraud, cyberbullying, sextortion, and kidnapping threats.

Jakimenko believes deepfakes will continue to improve in quality and scale, warning that platforms have little incentive to curb them. As manipulated content becomes more convincing and more accessible, she argues that organisations dealing directly with fraud, intelligence, and security must take the lead.

Cyberette is preparing for a seed funding round and plans to expand its capabilities in behavioural and sentiment analysis, as well as to explore earlier stages of the manipulation lifecycle. For now, the company remains focused on high-impact, high-risk cases that require speed, accuracy, and forensic-grade evidence.